Professionals using AI in their businesses are asking questions that practical training alone cannot answer. Questions about data privacy. About regulatory compliance. About what crosses legal or ethical lines when implementing AI in real-world operations.

These are not beginner questions. They come from people already using AI at scale who want to do so responsibly.

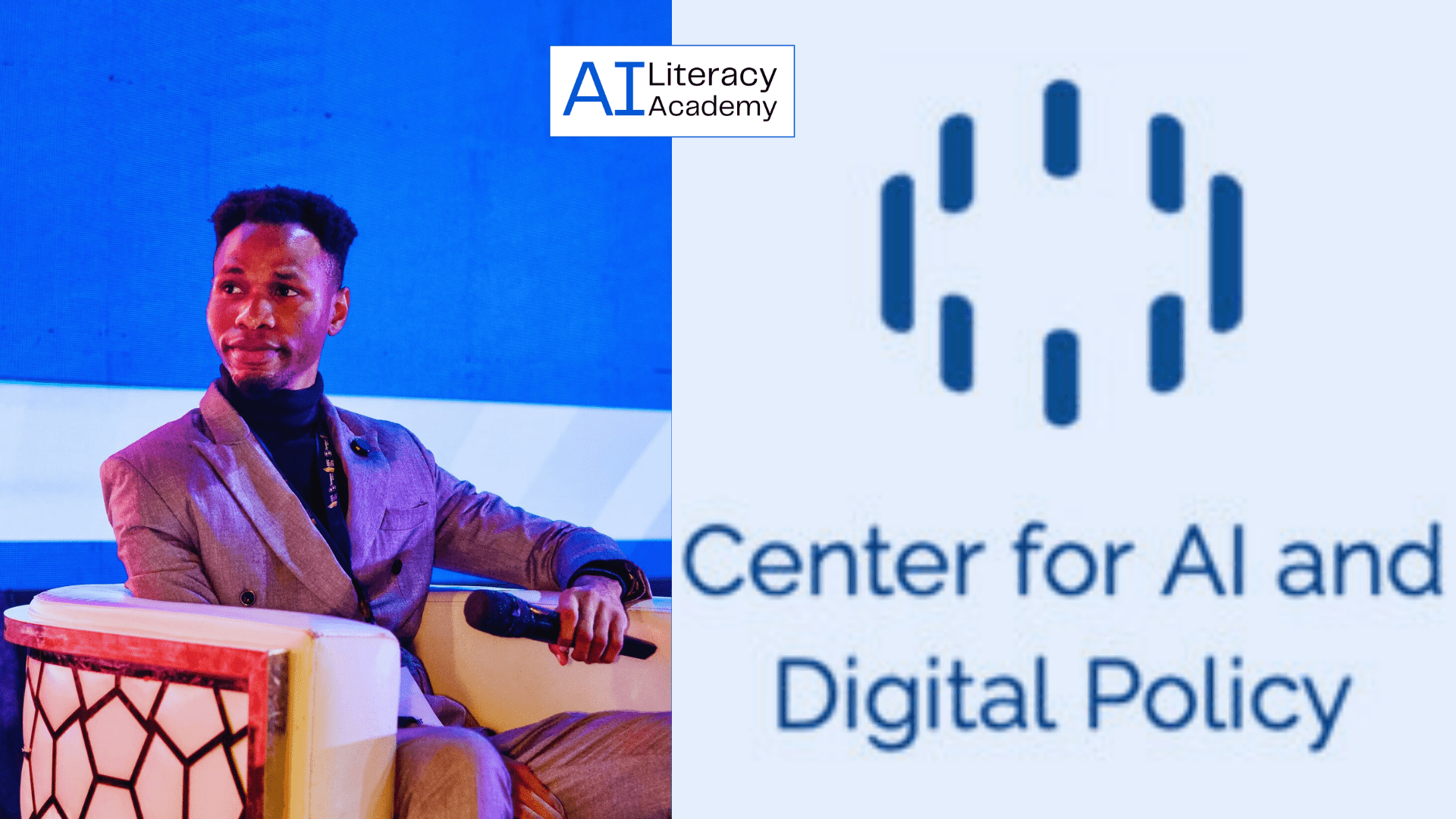

Fii Stephen, founder of AI Literacy Academy, has been accepted into the Center for AI and Digital Policy (CAIDP) AI Policy Clinic for Spring 2026. The clinic is a globally recognized program, and CAIDP works directly with the United Nations, UNESCO, the European Union, and governments worldwide on AI governance frameworks.

This represents a strategic expansion of what AI Literacy Academy can offer: not just how to use AI tools effectively, but how to navigate the regulatory and ethical landscape surrounding them.

The Gap Between Policy and Practice

Over the past two years, AI Literacy Academy has trained more than 800 professionals across 13 countries in practical AI implementation. The focus has been clear: how to use AI tools, design workflows, and apply automation in business and workplace environments.

But as adoption accelerated, a pattern emerged. Participants were asking not only how to use AI but also what they were legally allowed to do with it.

What data is safe to input into AI systems? What regulations apply to their specific industry or region? What risks do they assume as professionals implementing AI in their organizations? Where is the line between effective use and regulatory violation?

These questions sit at the intersection of technology, law, ethics, and public policy. And there is a growing gap between the people writing AI policies and the people expected to comply with them in real-world environments.

That gap creates confusion, risk, and implementation paralysis. Businesses want to adopt AI responsibly but lack clear guidance on what responsible adoption actually means in practice.

What the CAIDP AI Policy Clinic Provides

The Center for AI and Digital Policy operates at the highest levels of AI governance. The organization works with the United Nations, UNESCO, the European Union, and national governments to shape how AI is regulated globally.

Through the AI Policy Clinic, participants study alongside researchers and policy experts from around the world, engaging directly with frameworks that are shaping AI regulation:

The EU AI Act. Europe’s comprehensive regulatory framework categorizing AI systems by risk level and establishing compliance requirements.

OECD AI Principles. International standards for responsible AI stewardship adopted by 46 countries.

UNESCO Recommendation on the Ethics of Artificial Intelligence. The first global standard-setting instrument on AI ethics, adopted by all 193 UNESCO member states in 2021.

This is not theoretical study. It is direct engagement with the policy structures that businesses and professionals must navigate as AI adoption becomes a regulated activity rather than an experimental technology.

Bridging Implementation and Governance

Acceptance into CAIDP’s AI Policy Clinic positions AI Literacy Academy to address a gap that has emerged in professional AI education: the disconnect between knowing how to use AI and understanding the regulatory context in which it operates.

Professionals need both. Implementation expertise without governance awareness creates compliance risk. Policy knowledge without practical application experience produces unusable guidance.

Fii Stephen’s work with CAIDP will focus on bridging that divide: connecting the frameworks being developed at the policy level with the questions professionals are asking as they implement AI in real business contexts.

By the end of the program, AI policy education will be integrated into AI Literacy Academy’s curriculum. Participants will learn not only how to use AI tools and design workflows, but also how to assess legal and ethical risks, make informed decisions about data handling, and operate within emerging regulatory frameworks.

Why This Matters Now

AI regulation is no longer theoretical or distant. The EU AI Act became law in 2024. Countries across Africa, Asia, and Latin America are developing their own frameworks. Businesses operating internationally must navigate multiple regulatory regimes simultaneously.

Professionals who understand both the capabilities of AI and the constraints placed on its use will have a distinct advantage. They can implement AI confidently because they understand not just what is possible, but what is permissible.

AI Literacy Academy has always operated on the principle that AI literacy means practical, applicable knowledge, not abstract theory. That principle now extends to governance. Learning how to prompt AI is essential. So is understanding what you can legally do with the output.

The professionals who thrive in the next phase of AI adoption will not be those who know the most prompts or the most tools. They will be those who can make informed decisions about when to use AI, how to use it responsibly, and what risks they are managing in the process.

What Comes Next

This represents the beginning of a broader evolution in how AI Literacy Academy approaches professional AI education. Practical skills remain foundational. But comprehensive AI literacy now requires understanding the governance structures that shape how those skills can be applied.

The goal has not changed: to help professionals use AI responsibly, confidently, and effectively in real-world settings. What has changed is the recognition that responsibility now requires policy literacy alongside technical capability.

As governments scramble to regulate AI and businesses navigate compliance uncertainty, someone needs to translate between policy frameworks and practical implementation. The professionals who shape AI’s future won’t just know how to use it. They’ll understand how it should be governed.

Discover how AI Literacy Academy equips professionals with both practical AI skills and governance literacy for responsible implementation. Learn more at ailiteracyacademy.org.